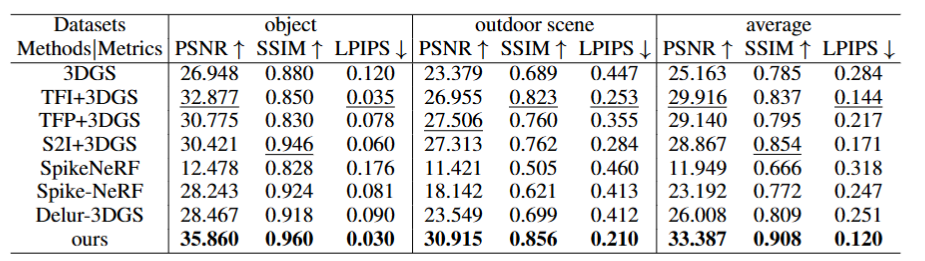

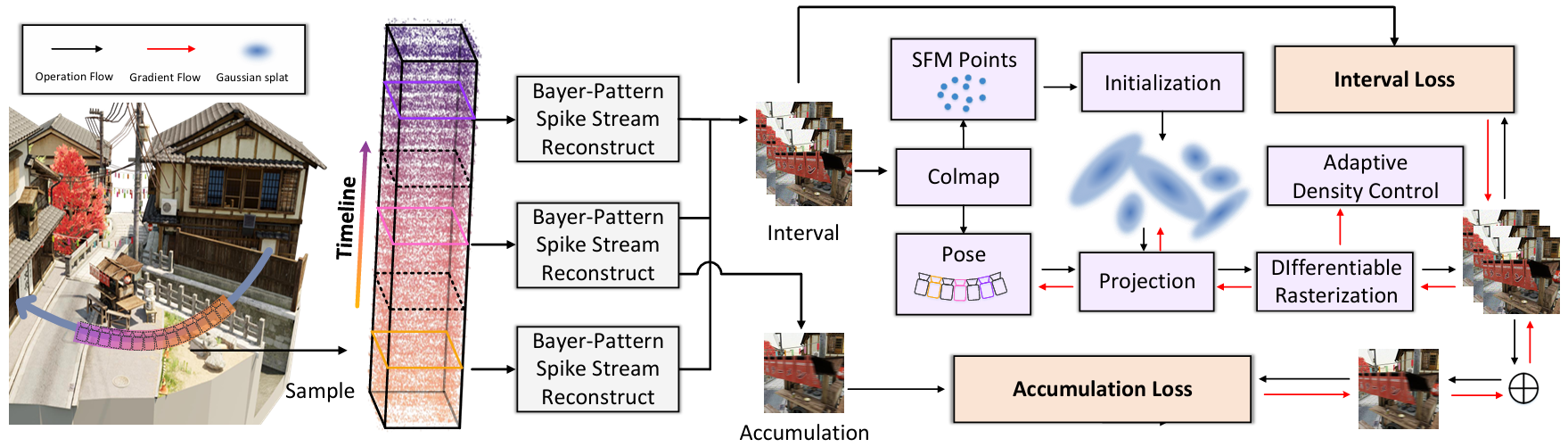

We first convert the Bayer-pattern spike streams into two representations: spike intervals and spike accumulation. Unlike the original 3DGS, we use the spike intervals to initialize the SfM points, camera poses, and Gaussian splats. We then embed the temporal accumulation process into the rasterizer to calibrate colorization while maintaining multi-view consistency. By progressively optimizing the 3DGS parameters with an accumulation loss and an interval loss, our method achieves high-quality 3DGS reconstruction.
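To make the two spike representations concrete, the following is a minimal sketch, not our implementation. It assumes a binary spike stream stored as a NumPy array of shape `(T, H, W)` and ignores the Bayer mosaic. The function names `accumulation_image` and `interval_image` are hypothetical, and the estimators are the common firing-rate and reciprocal inter-spike-interval formulations, which may differ from the exact definitions used in the pipeline.

```python
import numpy as np


def accumulation_image(spikes, start, window):
    """Mean firing rate per pixel over frames [start, start + window)."""
    return spikes[start:start + window].mean(axis=0)


def interval_image(spikes, t):
    """Reciprocal of the inter-spike interval that surrounds frame t.

    Pixels without a spike on both sides of t are set to 0.
    """
    T = spikes.shape[0]
    idx = np.arange(T).reshape(-1, 1, 1)
    fired = spikes.astype(bool)

    # Index of the last spike at or before t (-1 if none).
    prev = np.where(fired[:t + 1], idx[:t + 1], -1).max(axis=0, initial=-1)
    # Index of the first spike strictly after t (T if none).
    nxt = np.where(fired[t + 1:], idx[t + 1:], T).min(axis=0, initial=T)

    valid = (prev >= 0) & (nxt < T)
    interval = np.where(valid, nxt - prev, 1)  # placeholder 1 avoids divide-by-zero
    return np.where(valid, 1.0 / interval, 0.0)


# Toy stream: four pixels firing at different rates.
spikes = np.zeros((16, 2, 2), dtype=np.uint8)
spikes[::4, 0, 0] = 1   # one spike every 4 frames
spikes[::2, 0, 1] = 1   # one spike every 2 frames
spikes[:, 1, 1] = 1     # one spike every frame; pixel (1, 0) never fires

acc = accumulation_image(spikes, start=0, window=16)
itv = interval_image(spikes, t=5)
```

In this toy stream both estimates agree for each pixel, since the firing rates are constant. In general the interval estimate reflects brightness around a single instant, whereas the accumulation estimate averages over the whole window.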